Query Language

To visualize data from Enapter Cloud you need to use a simple query language based on YAML.

A typical request looks like this:

telemetry:
  - device: YOUR_DEVICE
    attribute: YOUR_TELEMETRY

Basics

Remember the fictional hydrogen sensor introduced in the blueprint tutorial? Let us query its readings.

Device

First, we need to know the UCM ID of the sensor. You can find it on the device page in the Enapter Cloud web interface. A UCM ID usually looks like this: CB748D2BD5D044995A0FD76F551F1AABF7384858.

Telemetry

Second, we need to know the names of the metrics of the sensor. Blueprint developers describe device telemetry in manifest.yml, so let us take a look at the telemetry section of the sensor's manifest:

# Sensors data and internal device state, operational data
telemetry:
  # Telemetry attribute reference name
  h2_concentration:
    # Attribute type, one of: float, integer, string
    type: float
    # Unit of measurement
    unit: "%LEL"
    display_name: H2 Concentration

The manifest declares the h2_concentration metric of type float, which is exactly what we need.

The fictional H2 sensor has only one metric, but other devices are likely to have many. You can request any of the metrics as long as they are declared in the manifest and there is relevant data in the Enapter Cloud.

Result

Now we have all the components required to build the request:

telemetry:
  - device: CB748D2BD5D044995A0FD76F551F1AABF7384858
    attribute: h2_concentration

This should be enough for simple use cases, so you could stop here. If you would like to discover other supported features, keep reading.

Deeper Dive

The request we have just built is automatically extended to something like this:

telemetry:
  - device: CB748D2BD5D044995A0FD76F551F1AABF7384858
    attribute: h2_concentration
    granularity: 1m
    aggregation: auto

Let us try to make sense of this.

Granularity and Aggregation

Say we want to request one day of sensor readings. How much data will the response contain?

| Time | Value |
|---|---|
| 2022-07-10 09:00:00 | 0.00005 |
| 2022-07-10 09:00:01 | 0.00006 |
| 2022-07-10 09:00:02 | 0.00006 |
| ... | ... |
| 2022-07-11 09:00:00 | 0.00005 |
| 2022-07-11 09:00:01 | 0.00005 |
| 2022-07-11 09:00:02 | 0.00004 |

If the sensor sends one data point per second, then there will be 24 * 60 * 60 = 86400 data points per day. You probably do not need this many.

If the size of a point on a graph is 1px and you have a 4K display, your computer cannot draw a horizontal line longer than 4096 points. Furthermore, people tend to view several graphs side by side, so the panels are usually half as wide. This means that you could request 86400 / (4096 / 2) ≈ 42 times fewer data points and still get a beautiful graph. And that is without even counting the padding around panels!
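The estimate above can be reproduced in a few lines of Python. This is only an illustrative sketch: the variable names are made up for this example, and the figures (one point per second, a 4096-pixel-wide display, panels half the screen wide) are the ones used in the text.

```python
# Rough estimate of how many data points a graph panel can actually show.
SECONDS_PER_DAY = 24 * 60 * 60   # the sensor sends one data point per second
DISPLAY_WIDTH_PX = 4096          # 4K display, one data point per pixel
PANELS_SIDE_BY_SIDE = 2          # panels are roughly half the screen wide

points_per_day = SECONDS_PER_DAY
visible_points = DISPLAY_WIDTH_PX // PANELS_SIDE_BY_SIDE
reduction_factor = points_per_day / visible_points

print(points_per_day)            # 86400
print(visible_points)            # 2048
print(round(reduction_factor))   # 42
```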

To load the dashboards faster, we need to reduce the amount of data processed and transferred over the network.

This can be done by:

- Grouping data points by some time interval (e.g. 1m);
- Applying an aggregation function to each group (e.g. max);
- Returning only the result of aggregation.

| Time | Max Value out of 60 |
|---|---|
| 2022-07-10 09:00:00 | 0.00006 |
| 2022-07-10 09:01:00 | 0.00005 |
| 2022-07-10 09:02:00 | 0.00006 |
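To make the grouping step concrete, here is a minimal Python sketch of bucketing followed by aggregation. It is not how Enapter Cloud implements it. The helper names `bucket_start` and `aggregate` are hypothetical, and the readings are made-up values (one point every 20 seconds instead of every second) chosen so that the result resembles the table above.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def bucket_start(ts: datetime, granularity: timedelta) -> datetime:
    """Round a timestamp down to the start of its time bucket."""
    epoch = datetime(1970, 1, 1)
    whole_buckets = (ts - epoch) // granularity  # integer number of buckets
    return epoch + whole_buckets * granularity


def aggregate(points, granularity, func):
    """Group (timestamp, value) pairs into buckets and reduce each bucket."""
    buckets = defaultdict(list)
    for ts, value in points:
        buckets[bucket_start(ts, granularity)].append(value)
    return [(start, func(values)) for start, values in sorted(buckets.items())]


# Made-up readings: one point every 20 seconds for three minutes.
start = datetime(2022, 7, 10, 9, 0, 0)
values = [0.00005, 0.00006, 0.00006,
          0.00005, 0.00004, 0.00005,
          0.00006, 0.00005, 0.00004]
points = [(start + timedelta(seconds=20 * i), v) for i, v in enumerate(values)]

# Equivalent in spirit to granularity: 1m with aggregation: max.
result = aggregate(points, timedelta(minutes=1), max)
for bucket, value in result:
    print(bucket, value)
```

Nine input points collapse into three output rows, one per one-minute bucket.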

Such a group of data points is called a time bucket.

granularity is the time interval that defines the size of a time bucket. By default, a granularity is chosen that makes the graph look good without requesting too many points.

aggregation is the function applied to the data points whose timestamps fall into the same time bucket.

Currently supported aggregation functions:

- avg: calculate the arithmetic mean;
- last: use the last known value;
- auto: select either avg or last depending on the data type;
- min: find the minimum value;
- max: find the maximum value.
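The following Python sketch shows what each function would return for a single time bucket. It is an illustration only. In particular, the text does not say which data types `auto` maps to `avg` and which to `last`; the sketch assumes numeric values are averaged and everything else falls back to the last value.

```python
from statistics import mean


def auto(values):
    """Assumption: average numeric buckets, otherwise take the last value."""
    is_numeric = all(
        isinstance(v, (int, float)) and not isinstance(v, bool) for v in values
    )
    return mean(values) if is_numeric else values[-1]


# Hypothetical mapping from aggregation name to a Python function.
AGGREGATIONS = {
    "avg": mean,
    "last": lambda values: values[-1],
    "auto": auto,
    "min": min,
    "max": max,
}

bucket = [2, 4, 9]  # made-up values that fell into one time bucket
for name, func in AGGREGATIONS.items():
    print(name, func(bucket))
```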

Gap Filling

Sometimes data sorted into time buckets can have gaps. This can happen if you have irregular sampling intervals, or you have experienced an outage of some sort. Gaps might make data analysis difficult: for example, you cannot sum two time series if one of them contains a time bucket that has no data at all.

You can use gap filling to create additional rows of data in any gaps, ensuring that the returned rows are in chronological order, and contiguous.

Currently the only supported gap filling method is last observation carried forward (locf).

LOCF

locf fills the gaps using the last observed data point value:

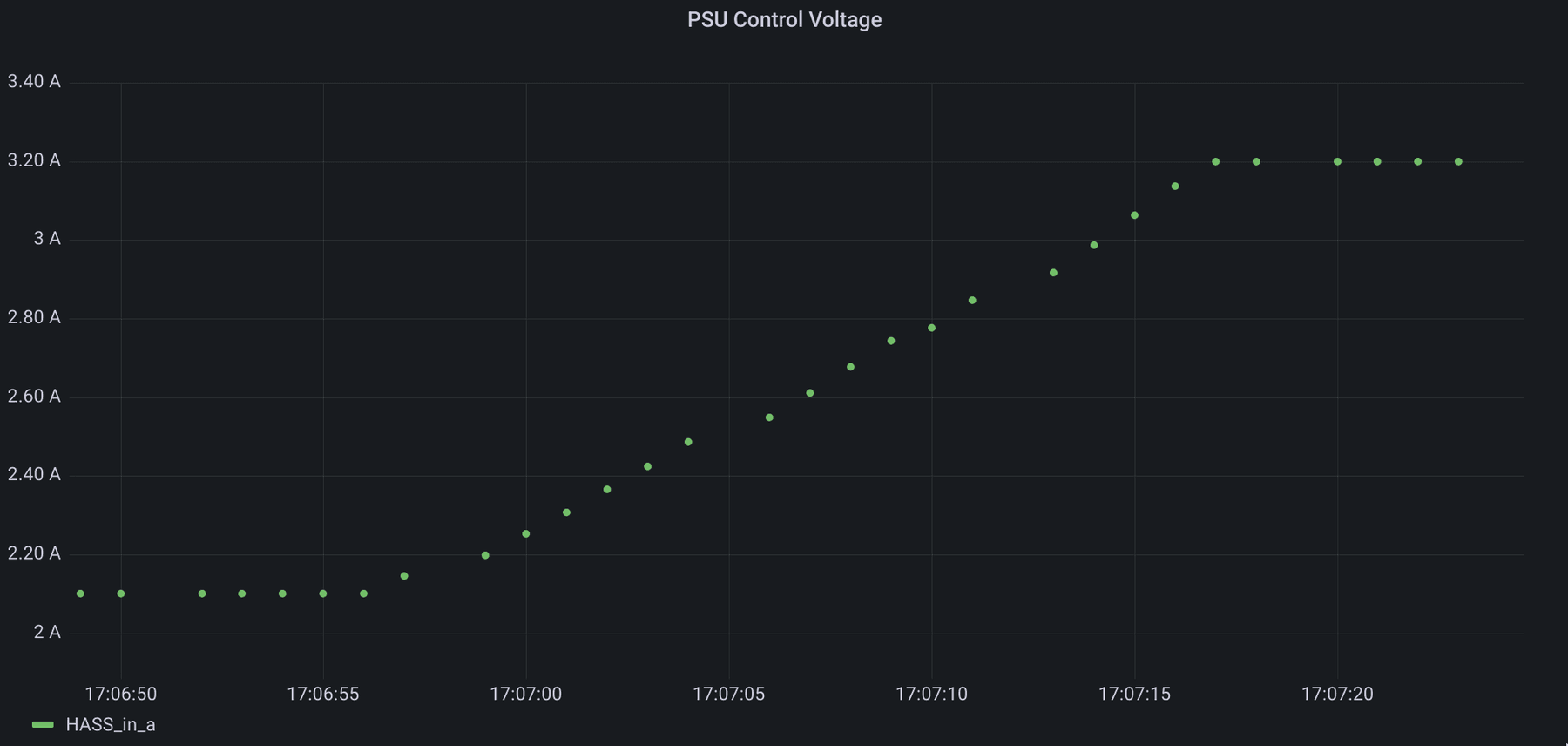

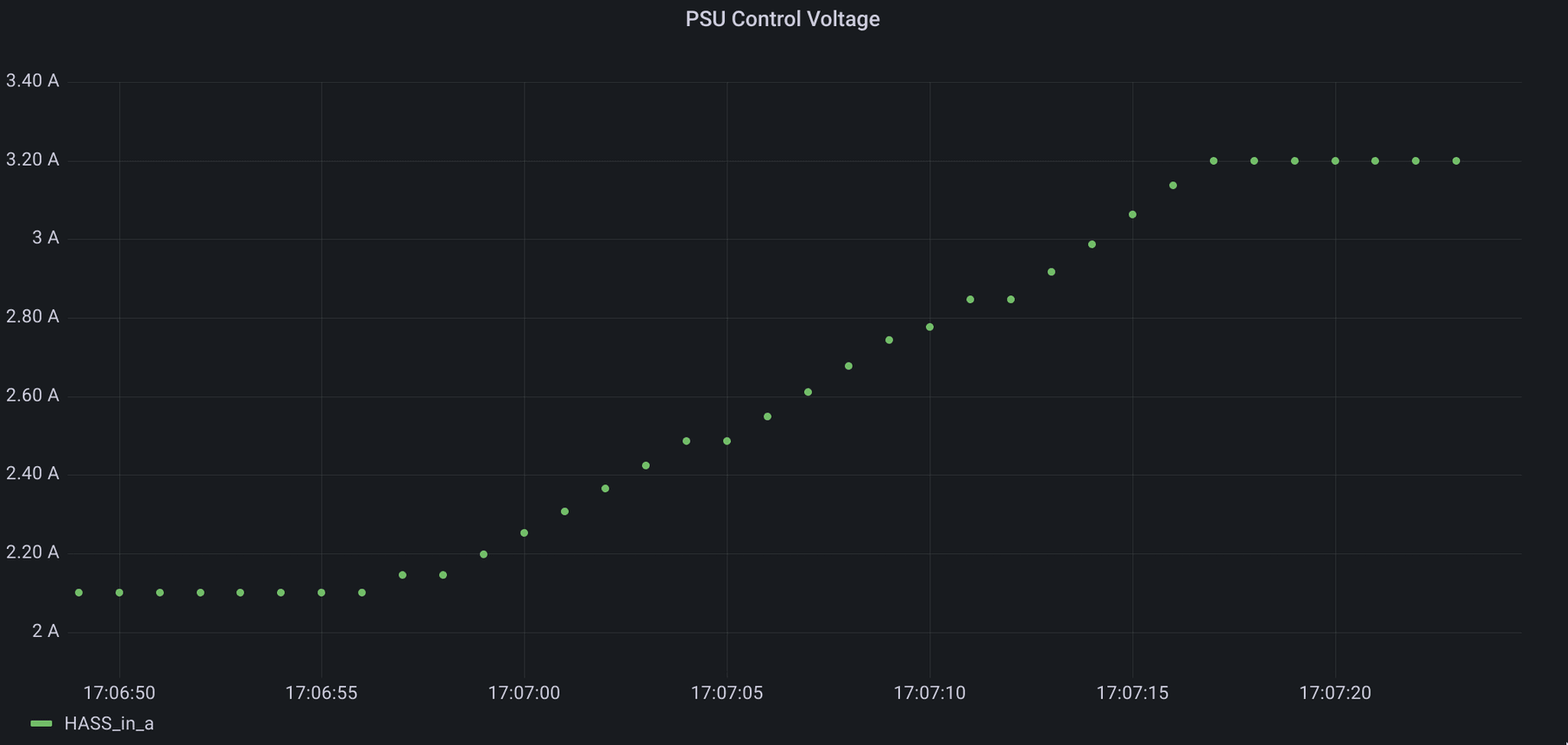

No LOCF

LOCF
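The idea behind last observation carried forward fits in a few lines of Python. This is a minimal sketch, not the Enapter Cloud implementation; the function name `locf` and the use of `None` to mark an empty time bucket are assumptions made for this example.

```python
def locf(buckets):
    """Fill empty buckets (None) with the most recent earlier value."""
    filled = []
    last_seen = None
    for value in buckets:
        if value is not None:
            last_seen = value
        filled.append(last_seen)
    return filled


print(locf([None, 1.0, None, None, 2.5, None]))
# [None, 1.0, 1.0, 1.0, 2.5, 2.5]
```

The leading bucket stays empty because there is no earlier observation to carry forward.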

Specify gap_filling.method in your query to enable locf:

telemetry:
  - device: CB748D2BD5D044995A0FD76F551F1AABF7384858
    attribute: h2_concentration
    granularity: 1m
    aggregation: auto
    gap_filling:
      method: locf

Because locf relies on having values before each time bucket to carry forward, it might not have enough data to fill in the first bucket if that bucket is empty. This can happen, for example, when the start of the query's time range falls in the middle of a gap.

To avoid an empty first time bucket, you can use the optional look_around parameter, which configures how far into the past to look for values outside of the specified time range:

telemetry:
  - device: CB748D2BD5D044995A0FD76F551F1AABF7384858
    attribute: h2_concentration
    granularity: 1m
    aggregation: auto
    gap_filling:
      method: locf
      look_around: 10m
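One way to picture look_around is as a seed value for the carry-forward step: the latest observation found within the look_around window before the start of the time range. The Python sketch below works under that assumption. The helper names are hypothetical, the sample data is made up, and the exact boundary handling in Enapter Cloud may differ.

```python
from datetime import datetime, timedelta


def seed_value(points, range_start, look_around):
    """Latest value in [range_start - look_around, range_start), or None."""
    window_start = range_start - look_around
    candidates = [(ts, v) for ts, v in points if window_start <= ts < range_start]
    if not candidates:
        return None
    return max(candidates, key=lambda point: point[0])[1]


def locf_with_seed(buckets, seed):
    """Carry values forward, starting from a seed found before the range."""
    filled, last_seen = [], seed
    for value in buckets:
        if value is not None:
            last_seen = value
        filled.append(last_seen)
    return filled


range_start = datetime(2022, 7, 10, 9, 0)
history = [
    (datetime(2022, 7, 10, 8, 40), 0.1),  # older than look_around, ignored
    (datetime(2022, 7, 10, 8, 55), 0.3),  # inside the 10-minute window
]
seed = seed_value(history, range_start, timedelta(minutes=10))
print(locf_with_seed([None, None, 0.5, None], seed))
# [0.3, 0.3, 0.5, 0.5]
```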

Telemetry Selectors

A common data analysis task is to compare the values of several metrics.

You might have noticed that the top-level telemetry YAML key corresponds to a list of items:

telemetry:
  - device: CB748D2BD5D044995A0FD76F551F1AABF7384858
    attribute: h2_concentration

Each item in this list is called a telemetry selector. A telemetry selector describes which metrics from which devices should be requested. Here is how to request h2_concentration from three devices:

telemetry:
  - device: CB748D2BD5D044995A0FD76F551F1AABF7384858
    attribute: h2_concentration
  - device: A4048878F81FE8243FDC9A4D7A5098CFA7289BC8
    attribute: h2_concentration
  - device: 16EEDED569D71B6F941D39AF47C6B126A1AF07AE
    attribute: h2_concentration

Device Selectors

Repeating the name of the metric for every device is tedious, so a device selector comes to the rescue.

The device YAML key in a telemetry selector corresponds to a device selector. A device selector specifies which devices to request metrics for. You have already used the simplest form of a device selector to select a device by its UCM ID. Here is how you can select multiple devices at once:

telemetry:
  - device:
      - CB748D2BD5D044995A0FD76F551F1AABF7384858
      - A4048878F81FE8243FDC9A4D7A5098CFA7289BC8
      - 16EEDED569D71B6F941D39AF47C6B126A1AF07AE
    attribute: h2_concentration

Attribute Selectors

The same logic is valid for requesting multiple metrics.

The attribute YAML key in a telemetry selector corresponds to an attribute selector. An attribute selector specifies which metrics to request. You have already used the simplest form of an attribute selector to select a metric by its name. Here is how you can select multiple metrics at once:

telemetry:
  - device: CB748D2BD5D044995A0FD76F551F1AABF7384858
    attribute:
      - h2_concentration
      - amperage
      - voltage

You can combine device and attribute selectors!

The following query requests 9 metrics in total (3 attributes for each of 3 devices):

telemetry:
  - device:
      - CB748D2BD5D044995A0FD76F551F1AABF7384858
      - A4048878F81FE8243FDC9A4D7A5098CFA7289BC8
      - 16EEDED569D71B6F941D39AF47C6B126A1AF07AE
    attribute:
      - h2_concentration
      - amperage
      - voltage
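The count of 9 comes from pairing every selected device with every selected attribute. A short Python sketch of that expansion, assuming each (device, attribute) pair yields one time series:

```python
from itertools import product

devices = [
    "CB748D2BD5D044995A0FD76F551F1AABF7384858",
    "A4048878F81FE8243FDC9A4D7A5098CFA7289BC8",
    "16EEDED569D71B6F941D39AF47C6B126A1AF07AE",
]
attributes = ["h2_concentration", "amperage", "voltage"]

# Every device is combined with every attribute.
series = list(product(devices, attributes))
print(len(series))  # 9
```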

Label Matchers

Each device and each telemetry attribute has a set of labels associated with it. Device and attribute selectors look for devices and attributes by matching values of these labels using predefined match operators.

Take a look at this example:

telemetry:
  - device: CB748D2BD5D044995A0FD76F551F1AABF7384858
    attribute: h2_concentration

This device selector may be considered equivalent to this one:

telemetry:
  - device:
      ucm_hardware_id:
        is_equal_to: CB748D2BD5D044995A0FD76F551F1AABF7384858
    attribute: h2_concentration

This means: select all devices whose UCM hardware ID is equal to CB748D2BD5D044995A0FD76F551F1AABF7384858.

It might seem strange to have this long syntax when the short one does the same, but it will make sense when the list of device labels expands. For example, device_type and site_name labels would allow you to select all electrolysers on your site:

telemetry:
  - device:
      type:
        is_equal_to: electrolyser
      site_name:
        is_equal_to: "My Site"
    attribute: voltage

The same logic applies to the attribute selector:

telemetry:
  - device:
      ucm_hardware_id:
        is_equal_to: CB748D2BD5D044995A0FD76F551F1AABF7384858
    attribute:
      name:
        is_equal_to: h2_concentration

This means: select all attributes whose name is equal to h2_concentration.
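The relationship between the short and long forms can be sketched as a small normalization step in Python. This only illustrates the equivalence described above. The function names are hypothetical, and the sketch covers just the single-string short form, since the text does not show how a list of values would be written in the long form.

```python
def expand_selector(selector, default_label):
    """Expand a bare string into {label: {"is_equal_to": value}}."""
    if isinstance(selector, str):
        return {default_label: {"is_equal_to": selector}}
    return selector  # already in the long form; other shapes are not covered


def expand_telemetry_selector(item):
    """Rewrite the device and attribute keys of one telemetry selector."""
    return {
        **item,
        "device": expand_selector(item["device"], "ucm_hardware_id"),
        "attribute": expand_selector(item["attribute"], "name"),
    }


short_form = {
    "device": "CB748D2BD5D044995A0FD76F551F1AABF7384858",
    "attribute": "h2_concentration",
}
long_form = expand_telemetry_selector(short_form)
print(long_form)
```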

Currently supported device labels are:

- ucm_hardware_id: hardware ID of the corresponding UCM;
- id: internal Enapter Platform device identifier.

Currently supported attribute labels are:

- name: name of the attribute as in Blueprint.

Currently supported match operators are:

- is_equal_to: match if the label value is exactly equal;
- matches_regexp: match if the label value matches the specified POSIX regular expression.

You may find the matches_regexp match operator useful for querying a group of similarly named entities. For example, if you have a device with attributes power_1, power_2 and power_3, all of them can be conveniently queried like this:

telemetry:
  - device: 9A289B7C3E3EB74745E557820391D5D7E5A4FC3F
    attribute:
      name:
        matches_regexp: power_\d
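To preview which names a pattern would select, you can try it locally. The Python sketch below uses the standard `re` module as a stand-in; it is not a POSIX regular expression engine, so results may differ from Enapter Cloud in edge cases. Whether the match is anchored to the whole label value is not stated here; the sketch assumes a full match. The extra attribute names are made up for contrast.

```python
import re

# power_1..power_3 come from the example; the other names are made up.
attribute_names = ["power_1", "power_2", "power_3", "voltage", "power_total"]

pattern = re.compile(r"power_\d")

# Assumption: the whole attribute name has to match the pattern.
matched = [name for name in attribute_names if pattern.fullmatch(name)]
print(matched)  # ['power_1', 'power_2', 'power_3']
```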